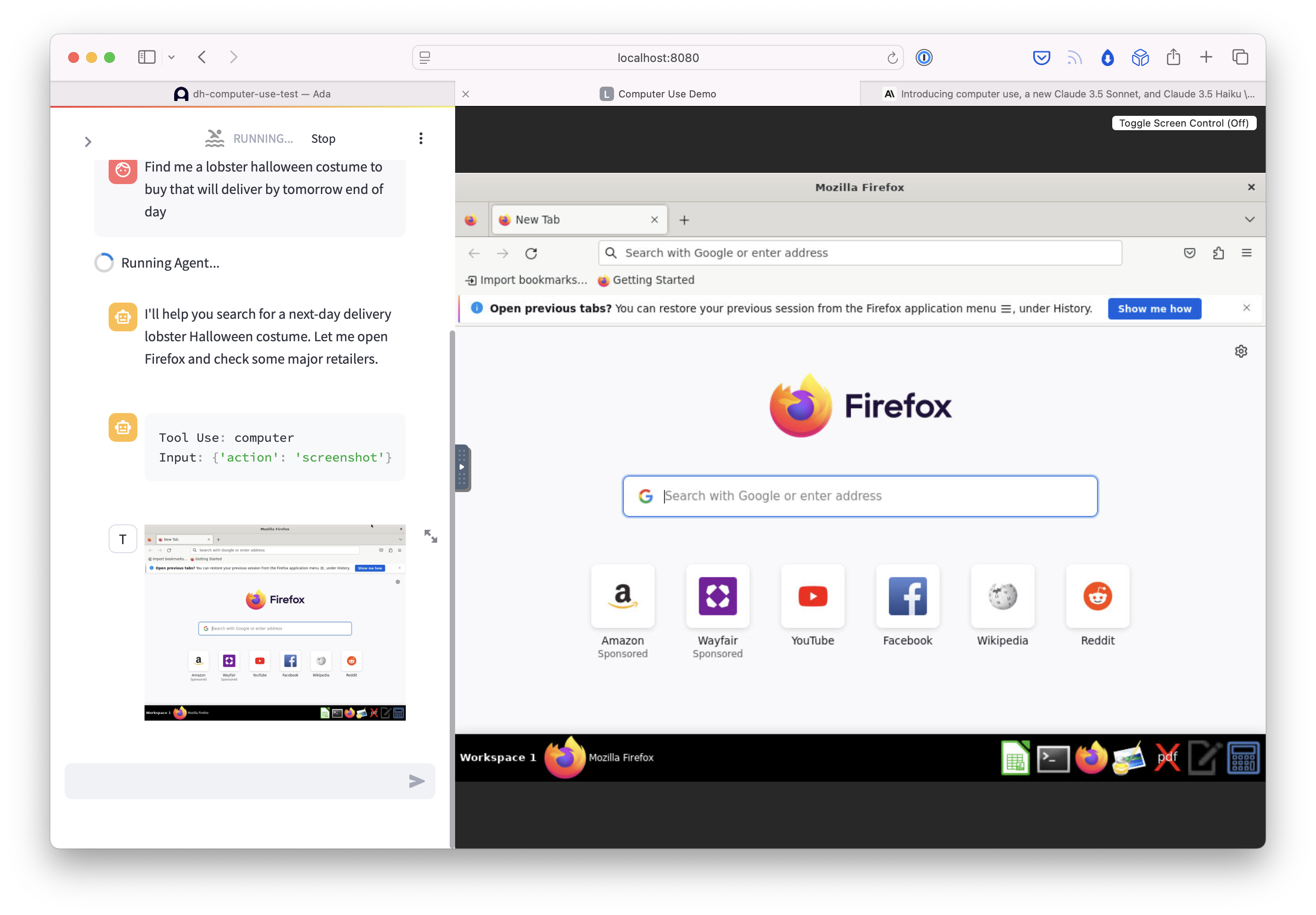

I just tried out Anthropic’s Computer Use demo Docker image. It spins up a virtual desktop inside a Docker container and connects it through noVNC to a Streamlit app, which is served from the same container. To use it, you type instructions in the chat box and the model decides what to do. It seems to control when to take a screenshot (as a discrete action) as well as when and where to interact with the virtual desktop. It knows that it has access to Firefox and to bash for running shell commands.
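To make that loop concrete, here is a rough sketch of how I picture it: the model picks one action at a time (a screenshot, a click, some typing) and sees the result before choosing the next one. This is not the demo's actual code. `Action`, `run_agent`, `scripted_model`, and `fake_desktop` are all hypothetical names, and the "model" here is just a fixed script so the example runs without an API key or a container.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """One step chosen by the model (hypothetical shape, not the demo's schema)."""
    kind: str  # e.g. "screenshot", "left_click", "type", "done"
    payload: dict = field(default_factory=dict)


def run_agent(instruction, choose_action, execute, max_steps=10):
    """Ask for one action at a time until the model says it is done."""
    history = [("user", instruction)]
    for _ in range(max_steps):
        action = choose_action(history)
        if action.kind == "done":
            break
        # The result (e.g. a screenshot) is fed back so the next choice can use it.
        history.append((action.kind, execute(action)))
    return history


def scripted_model(history):
    """Stand-in for the real model: replays a fixed script of actions."""
    script = [
        Action("screenshot"),
        Action("left_click", {"coordinate": [640, 360]}),
        Action("type", {"text": "anthropic.com"}),
        Action("done"),
    ]
    return script[len(history) - 1]


def fake_desktop(action):
    """Stand-in for the virtual desktop: just reports what it was asked to do."""
    return f"ran {action.kind}"


history = run_agent("Open anthropic.com in Firefox", scripted_model, fake_desktop)
for kind, result in history:
    print(kind, "->", result)
```

The point of the sketch is that a screenshot is just another action the model can choose, which matches what I saw in the chat log: it decides for itself when it needs to look at the screen again.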

I wanted to see if it could add a knowledge base to one of our bots. The high-level idea: can this LLM agent build another LLM agent?

It was able to add a knowledge base for anthropic.com and even evaluate the agent’s performance on a few example questions it came up with. But when I asked it to add guidance based on its evaluations, it stumbled over the instruction and got lost writing bash commands to launch Firefox. Here’s a list of things I saw it stumble over:

- Understanding how to dismiss modals like our “test chat” modal by clicking outside of it

- Clearing inputs before pasting text (it just keeps pasting on top of its previous text)

- Scrolling elements with overflowing content. It seems to only be able to issue `Page_Up` and `Page_Down` commands (?)

- Handling crashes in an app (like Firefox)
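For the input-clearing and scrolling items, here is a small sketch of the key sequences I would have expected it to issue. The dictionary shape (`action`, `text`) is an assumption loosely modeled on what showed up in the chat log, not a documented schema, and both helper names are hypothetical. It also assumes the target input already has keyboard focus.

```python
def replace_text_actions(text):
    """Select everything in the focused input, then type over the selection."""
    return [
        {"action": "key", "text": "ctrl+a"},  # select the existing contents
        {"action": "type", "text": text},     # typing replaces the selection
    ]


def scroll_actions(pages):
    """Scroll by whole pages: positive is down, negative is up."""
    key = "Page_Down" if pages > 0 else "Page_Up"
    return [{"action": "key", "text": key} for _ in range(abs(pages))]


print(replace_text_actions("Be concise and cite sources."))
print(scroll_actions(2))
```

Page keys act on whatever element has focus, which may be why nested scroll areas tripped it up: the page scrolls, but the overflowing element inside it does not.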

So, overall, I’m pretty impressed. It’s the best demo of its kind that I have seen and one of the first I’ve been able to run on my own machine. In a few iterations, I think this could be a pretty big deal for RPA.